I was interested in trying the new FLUX image generation models by Black Forest Labs, locally of course.

Alas, the FLUX[dev] model has 12 billion parameters, plus the text encoders, and would a priori require more than 24 GB of VRAM: twice the 12 GB my GeForce RTX 3060 GPU provides.

Fortunately, the models have since been quantized, for example to the 4-bit NormalFloat (NF4) data type in the stable-diffusion-webui-forge project, which significantly reduces the amount of video memory required.
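A back-of-the-envelope estimate of the weight memory makes these numbers concrete. This is a sketch under stated assumptions: 16 bits per parameter for the unquantized model (bfloat16/float16) and 4 bits for NF4, ignoring the text encoders, activations and the small per-block scaling constants that NF4 quantization stores alongside the weights. The function name `weights_gb` is made up for this example.

```shell
# Rough size of the transformer weights alone (no text encoders, no activations):
# parameters x bits per parameter / 8 bits per byte, in GB (10^9 bytes).
PARAMS=12000000000  # FLUX[dev]: about 12 billion parameters

weights_gb() {
  # $1: bits stored per parameter (16 for bfloat16/float16, 4 for NF4)
  awk -v p="$PARAMS" -v bits="$1" 'BEGIN { printf "%.1f\n", p * bits / 8 / 1e9 }'
}

echo "16-bit weights: $(weights_gb 16) GB"
echo "4-bit (NF4) weights: $(weights_gb 4) GB"
```

This prints 24.0 GB and 6.0 GB; the latter is roughly consistent with the 6246.84 MB that Forge reports for the quantized FLUX model in the benchmark logs.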

Sample output for the prompt “FLUX written on a shipping container”.

Running stable-diffusion-webui-forge in Docker

The README of the stable-diffusion-webui-forge repository only mentions Windows, but Linux is supported as well, and it is relatively easy to run the server in a Docker container (which provides the right dependencies without polluting the host).

Save the following in a Dockerfile:

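```dockerfile
FROM nvidia/cuda:12.4.1-cudnn-runtime-ubuntu22.04

# google-perftools provides tcmalloc, which webui.sh preloads when available;
# libgl1 is needed by OpenCV
RUN apt-get update && apt-get install -y python3 python3-venv git google-perftools bc libgl1

# The bind-mounted repository is owned by another user, so mark it as safe for git
RUN git config --global --add safe.directory /app

VOLUME /app
EXPOSE 7860

# -f allows webui.sh to run as root; --listen makes the UI reachable from outside the container
ENTRYPOINT ["/app/webui.sh", "-f", "--listen", "--port", "7860", "--ckpt-dir", "/app/checkpoints"]
```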

Upon first execution, the webui.sh script creates a virtual environment, installs additional dependencies, and clones repositories (see webui.sh and launch_utils.py). Ideally, we would copy the source code of the application and execute webui.sh at build time to bake these dependencies into the image. However, this is not completely trivial: it requires changing the default Docker runtime so that the GPU is visible during docker build and the right dependencies are pulled. The scripts also save configuration directly in the app root, which would make persisting it difficult:

```console
$ ls -l | rg root
drwxr-xr-x - root __pycache__
drwxr-xr-x - root cache
.rw-r--r-- 1.1k root config.json
drwxr-xr-x - root config_states
drwxr-xr-x - root outputs
.rw-r--r-- 269 root params.txt
drwxr-xr-x - root repositories
.rw-r--r-- 82k root ui-config.json
drwxr-xr-x - root venv
```

Instead, we simply mount the source code on /app, which will also contain this additional data after execution.

```console
$ # Build the Docker image
$ docker build -t forge .

$ # Clone the repository
$ cd /data
$ git clone https://github.com/lllyasviel/stable-diffusion-webui-forge

$ # Start a container
$ docker run --name forge --rm --gpus all -p 7860:7860 \
    -v /data/stable-diffusion-webui-forge:/app forge
```

The NVIDIA Docker runtime is of course required so that the GPUs are available inside the container.

The Gradio UI should then be accessible on http://localhost:7860/.

The data (dependencies, models, configuration) lives in the mounted directory on the host, so it persists across container restarts, even though --rm deletes the container itself when it stops.

Preparing FLUX[dev]

The NF4-quantized FLUX[dev] model can be downloaded at this location, following this GitHub discussion. The .safetensors file simply needs to be placed in the checkpoints folder of the repository.

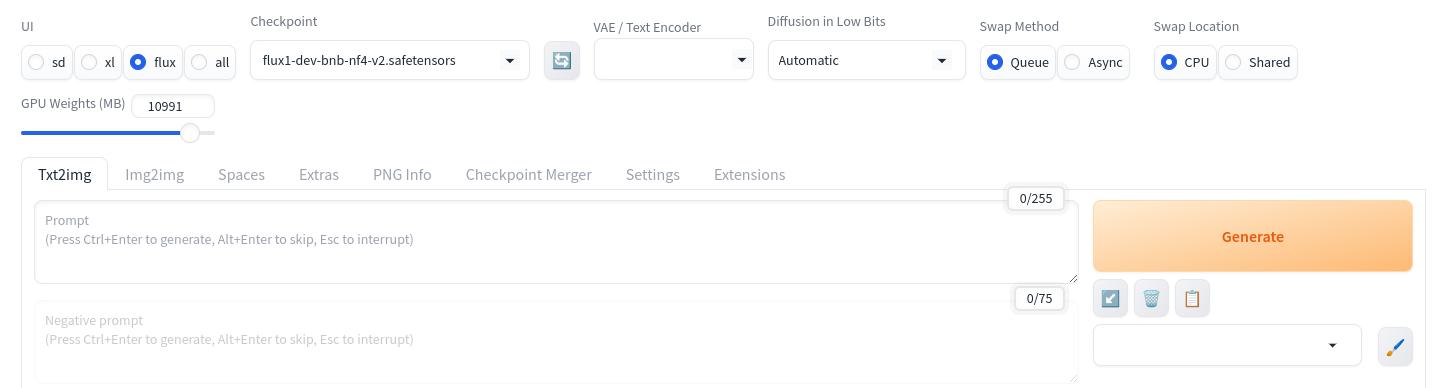

It then suffices to select “flux” and the checkpoint at the top of the UI, and to enter a prompt.

The outputs are saved in the outputs folder of the repository (accessible on the host).
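To grab the latest image from the host without browsing the folder tree, a small helper can search the outputs directory recursively. This is a sketch assuming the default PNG output format and GNU find (for `-printf`); the function name `latest_output` is hypothetical.

```shell
# Print the most recently modified PNG below a directory (searched recursively).
# GNU find's %T@ prints the modification time in seconds since the epoch,
# so a numeric sort puts the newest file last.
latest_output() {
  find "$1" -type f -name '*.png' -printf '%T@ %p\n' | sort -n | tail -n 1 | cut -d ' ' -f 2-
}
```

For example: `latest_output /data/stable-diffusion-webui-forge/outputs`.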

Four sample outputs from FLUX[dev].

Benchmarking

The logs of one generation look like this:

```
[GPU Setting] You will use 91.48% GPU memory (10991.00 MB) to load weights, and use 8.52% GPU memory (1024.00 MB) to do matrix computation.
Loading Model: {'checkpoint_info': {'filename': '/app/checkpoints/flux1-dev-bnb-nf4-v2.safetensors', 'hash': 'f0770152'}, 'additional_modules': [], 'unet_storage_dtype': None}
[Unload] Trying to free all memory for cuda:0 with 0 models keep loaded ... Done.
StateDict Keys: {'transformer': 1722, 'vae': 244, 'text_encoder': 198, 'text_encoder_2': 220, 'ignore': 0}
Using Detected T5 Data Type: torch.float8_e4m3fn
Using Detected UNet Type: nf4
Using pre-quant state dict!
Working with z of shape (1, 16, 32, 32) = 16384 dimensions.
K-Model Created: {'storage_dtype': 'nf4', 'computation_dtype': torch.bfloat16}
Model loaded in 0.9s (unload existing model: 0.2s, forge model load: 0.7s).
Skipping unconditional conditioning when CFG = 1. Negative Prompts are ignored.
[Unload] Trying to free 7725.00 MB for cuda:0 with 0 models keep loaded ... Done.
[Memory Management] Target: JointTextEncoder, Free GPU: 10963.38 MB, Model Require: 5154.62 MB, Previously Loaded: 0.00 MB, Inference Require: 1024.00 MB, Remaining: 4784.76 MB, All loaded to GPU.
Moving model(s) has taken 2.02 seconds
Distilled CFG Scale: 3.5
[Unload] Trying to free 9411.13 MB for cuda:0 with 0 models keep loaded ... Current free memory is 5663.55 MB ... Unload model JointTextEncoder Done.
[Memory Management] Target: KModel, Free GPU: 10897.91 MB, Model Require: 6246.84 MB, Previously Loaded: 0.00 MB, Inference Require: 1024.00 MB, Remaining: 3627.07 MB, All loaded to GPU.
Moving model(s) has taken 1.57 seconds
100%|##########| 20/20 [01:04<00:00, 3.24s/it]
[Unload] Trying to free 4495.77 MB for cuda:0 with 0 models keep loaded ... Current free memory is 4410.01 MB ... Unload model KModel Done.
[Memory Management] Target: IntegratedAutoencoderKL, Free GPU: 10895.94 MB, Model Require: 159.87 MB, Previously Loaded: 0.00 MB, Inference Require: 1024.00 MB, Remaining: 9712.07 MB, All loaded to GPU.
Moving model(s) has taken 3.15 seconds
Total progress: 100%|██████████| 20/20 [01:06<00:00, 3.34s/it]
```

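The text encoders (JointTextEncoder) require about 5 GB of VRAM and the FLUX model itself (KModel) about 6 GB, each plus 1 GB reserved for inference. Since Forge unloads the text encoders before moving FLUX to the GPU, the two never need to fit in memory at the same time.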
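The bookkeeping in the `[Memory Management]` lines can be checked with a small awk filter. This is a sketch that assumes the exact line format shown in the logs above; the function name `summarize_memory` is hypothetical. For the three lines in this log, the logged "Remaining" value equals Free GPU minus Model Require minus Inference Require (Previously Loaded is 0 in every case, so its role is not exercised here).

```shell
# Parse Forge "[Memory Management]" lines from stdin and compare the logged
# "Remaining" value with Free GPU - Model Require - Inference Require.
summarize_memory() {
  awk -F', ' '/\[Memory Management\]/ {
    # Fields look like "Free GPU: 10963.38 MB"; store each value by its key.
    for (i = 1; i <= NF; i++) {
      if (split($i, kv, ": ") == 2) v[kv[1]] = kv[2]
    }
    # awk keeps the leading number when converting "10963.38 MB" with + 0.
    target = v["[Memory Management] Target"]
    free = v["Free GPU"] + 0
    model = v["Model Require"] + 0
    infer = v["Inference Require"] + 0
    printf "%s: model %.2f MB, logged remaining %.2f MB, computed %.2f MB\n",
      target, model, v["Remaining"] + 0, free - model - infer
  }'
}
```

While the container from the previous section is running, it can be fed with e.g. `docker logs forge 2>&1 | summarize_memory`.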

The FLUX models were trained on multiple resolutions and aspect ratios. The following very reasonable timings can be observed on my 3060:

| Resolution | Time (mm:ss) |
|---|---|
| 896 x 1152 (default) | 01:13 |
| 1024 x 1024 | 01:10 |
| 512 x 512 | 00:22 |
| 256 x 256 | 00:10 |
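To compare the rows, the timings can be normalized by pixel count. The awk sketch below assumes each time covers one complete generation with the same settings apart from the resolution; the function name `seconds_per_megapixel` is made up for this example.

```shell
# Seconds per megapixel for each "WIDTHxHEIGHT MM:SS" line on stdin.
seconds_per_megapixel() {
  awk '{
    split($1, wh, "x")   # "896x1152" -> 896, 1152
    split($2, t, ":")    # "01:13"    -> 1 min, 13 s
    mp = wh[1] * wh[2] / 1e6
    secs = t[1] * 60 + t[2]
    printf "%s: %.3f MP, %d s, %.1f s/MP\n", $1, mp, secs, secs / mp
  }'
}

# Values copied from the table above.
printf '%s\n' '896x1152 01:13' '1024x1024 01:10' '512x512 00:22' '256x256 00:10' |
  seconds_per_megapixel
```

This gives about 67 to 71 s/MP around one megapixel, but about 84 s/MP at 512 x 512 and 153 s/MP at 256 x 256: small images do not get proportionally faster, presumably because fixed costs such as the model moves visible in the logs weigh more.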

The stable-diffusion-webui-forge repository contains instructions on how to potentially speed this up.